Candyland

What do computational processes have to do with the Game of Destiny?

Readers of a certain demographic will remember the board game Candyland. In Candyland, players compete in a race along a colorful track. On your turn, you draw a card, then move your pawn to the next space matching the card’s color. There are a few other rules, but they’re all straightforward variations on that one.

Candyland says it’s for ages 3 and up, and it caters to that young audience by having no strategy elements whatsoever. Players in Candyland don’t make choices; the rules simply tell them what to do. If a game is, as Civilization creator Sid Meier says, “a series of interesting decisions,” then Candyland isn’t even a game. It’s a preschool lesson in rule-following made palatable by a heaping spoonful of sugar.

Still, if Candyland were only notable for having no decisions, that would hardly make it unique among games. Plenty of kids’ games are won or lost based solely on chance—dreidel and snakes and ladders come to mind.

No, the thing that really makes Candyland interesting is that not only is there no strategy involved—there’s arguably no random chance either. Yes, the deck of cards is shuffled, but crucially, the rules state that this happens before the game begins. That means if you know the initial conditions of the game (i.e. the order of the deck and the turn order of the players) you can perfectly predict what is going to happen.

As my friend Josh used to say, Candyland is a Game of Destiny.

What does this have to do with software?

In the previous post, I introduced computational processes. In this one, I’m going to argue that a game of Candyland is a computation. Because it looks very different from a typical computation, it is a good starting point for us to probe the boundaries of the concept, and consider more deeply what it means to compute. Along the way, we’ll learn about three important properties of the processes that inhabit computers—properties that Candyland shares:

Processes are deterministic by default.

Processes can be suspended and resumed.

Processes are not dependent on any particular medium.

A Software Implementation of Candyland

First off: if a game of Candyland is a computation, what is it computing?

The answer is pretty straightforward: it’s computing the answer to the question “who wins?” We can model Candyland as a function that takes a deck and a number of players, and returns a number indicating which player reaches the end of the track first. Here it is outlined in TypeScript:

// candyland.ts

interface Card {

// ...

}

interface InitialConditions {

deck: Card[];

players: number;

}

function winner(i: InitialConditions): number {

// ...

}This function spoils the suspense inherent in a traditional game of Candyland, by straight up revealing who wins without telling the story of how it happened. If we were going to implement a Candyland computer game for humans to play, we probably wouldn’t use a function like winner. We’d want something that can go one turn at a time, like this:1

interface GameState {

deck: Card[];

pawnPositions: number[];

whoseTurn: number;

}

function takeTurn(state: GameState): GameState {

// ...

}This solution is more flexible, as the winner function can be implemented in terms of takeTurn. It just has to initialize the game state and then call takeTurn repeatedly until a winner is identified:

function winner(initial: InitialConditions): number {

let gameState = {

deck: initial.deck,

pawnPositions: Array(initial.players).fill(0),

whoseTurn: 0,

};

while (!winnerAt(gameState)) {

gameState = takeTurn(gameState);

}

return winnerAt(gameState);

}

function winnerAt(gameState) {

return gameState.pawnPositions.findIndex(pos => pos === GOAL);

}Of course, as we saw in the post on behavior, the winner function doesn’t have to be implemented this way, as long as it always returns the correct answer. E.g. maybe it could take shortcuts to determine the winner without playing every turn. I don’t see any such shortcuts, but I’m not going to claim that none exist. Exercise for the reader :)

Three Properties of Processes

Earlier, I teased three properties of processes. To recap, these are:

Processes are deterministic by default.

Processes can be suspended and resumed.

Processes are nonphysical entities, and are not dependent on any particular medium.

Let’s consider each of these through the lens of Candyland.

Determinism

In a deterministic process, the end state is fully determined by the initial state. Thus, if you create several processes with the same initial state, they’re all going to get the same answer—no matter when they run, or whose computer they’re running on.

Here is a shell command that starts a deterministic process which sums the integers from 1 to 10:

node -e 'console.log(

Array(10).fill()

.map((_, i) => i + 1)

.reduce((a, b) => a + b)

)'The initial state of this process is simply the JavaScript code passed to it on the command line. As the process runs, it augments this state with many additional variables and data structures as it parses and evaluates the JS code. The process is extraordinarily complex—but it always gives the same answer. Assuming node refers to the same program on both of our machines, you and I will both get 55 every time we run this command.

In every OS I’m aware of, processes are deterministic by default. You can get nondeterminism, of course, but you have to ask for it explicitly. (More on how that works later.) Determinism makes processes much easier to debug—imagine if every time you stepped through a + b in a debugger you got a different answer!—but it limits what they can do. A deterministic process cannot ask the user for input, call a web service, or even look at the current time, since the results of those operations are not predictable from the process’s initial state.

Candyland is a deterministic process. You can tell because the winner function I outlined earlier is a pure function. Its return value depends only on the argument you pass to it. If you call it with the same argument over and over, you’ll get the same result—every time, everywhere.

In computing, deterministic processes are great because they’re easy to predict, test, and debug. It’s worth asking, though: if the end state of our process is fully determined by the start state, why run the process at all? Why not just jump straight to the conclusion?

The answer is that we might not know what the end state is going to be before we start. Moreover, there might not be any quicker way to find out what the end state will be than to run the process. Thus, we can view processes as revealing the hidden implications of their initial conditions.

In my head, I visualize this as a bit like protein folding. The initial state of the process is unstable; it “wants” to get to the end state. There’s a palpable tension, a feeling of suspense. As the process runs, the rules of the program drive the state, step by step, toward the goal. When the process reaches the final “low energy” state where the rules no longer cause it to change, the tension is resolved. Ahhh. We can relax.

When this step-by-step evolution is explicit, as in the takeTurn-based implementation of winner above, it can make the code easier to test and reason about. If our tests confirm that takeTurn behaves correctly for a representative set of game states, then winner is also likely to be correct. If there’s a bug in winner, we can probably isolate the bug to a single takeTurn call and then debug takeTurn.

For me, watching the intricate self-folding machinery of computations never gets old. It’s one of the reasons I love unit testing and test-driven development. Computation speaks to the same primal desire for tension and release that drives our love of games, music, and stories. Programming adds to this the satisfaction of making something that works.

Introducing Nondeterminism

As lovely as determinism is, most practical programs aren’t deterministic. Even a program as simple as the date command in macOS and Linux needs to output different results depending on when and where it is run, and thus can’t be deterministic. As another example: yarn init, which creates a new JavaScript project, prompts its user for information about the project, so it’s not deterministic either. Nondeterminism is everywhere in software.

Unfortunately, nondeterminism is also a major contributor to software bugs. While popular mythology attributes computer malfunctions to ghosts, demons, or other bogeys, their actual cause is invariably mundane: the software simply depends on conditions outside its control. Certain sequences of nondeterministic events lead to “bad states,” from which the process evolves in an undesirable direction. The nondeterminism makes these bugs difficult to reproduce and squash.

So, we who write software face a dilemma. Determinism is precious, but nondeterminism is necessary. Fortunately, operating systems like Linux give us a way to have our cake and eat it too. While processes are deterministic by default, they’re allowed to perform a special ritual that summons a nondeterministic effect. This type of ritual is called a system call.

The actual mechanism by which system calls work is both complex and irrelevant to our purposes here, and to be honest I don’t understand certain details all that well yet. So I’m not going to try to explain how system calls are implemented. I’ll just tell you how I mentally model their behavior.

Conceptually, a system call has two parts:

A request to the OS, which is simply data that the process puts in a well-known location where the OS will look for it. This might say something like “Please read 10 bytes from the file with ID 52, and put those bytes in my memory at address 1234.”

An instruction in the process’s code that says “PAUSE.”3

As an example, let’s imagine what happens to a process that makes the aforementioned request to read a file. As our scene opens, the CPU is going through the process’s code, instruction by instruction, deterministically updating the process’s state as appropriate. When the CPU gets to the PAUSE instruction, it stops executing the process’s code and (figuratively) hollers at the operating system to get its attention.

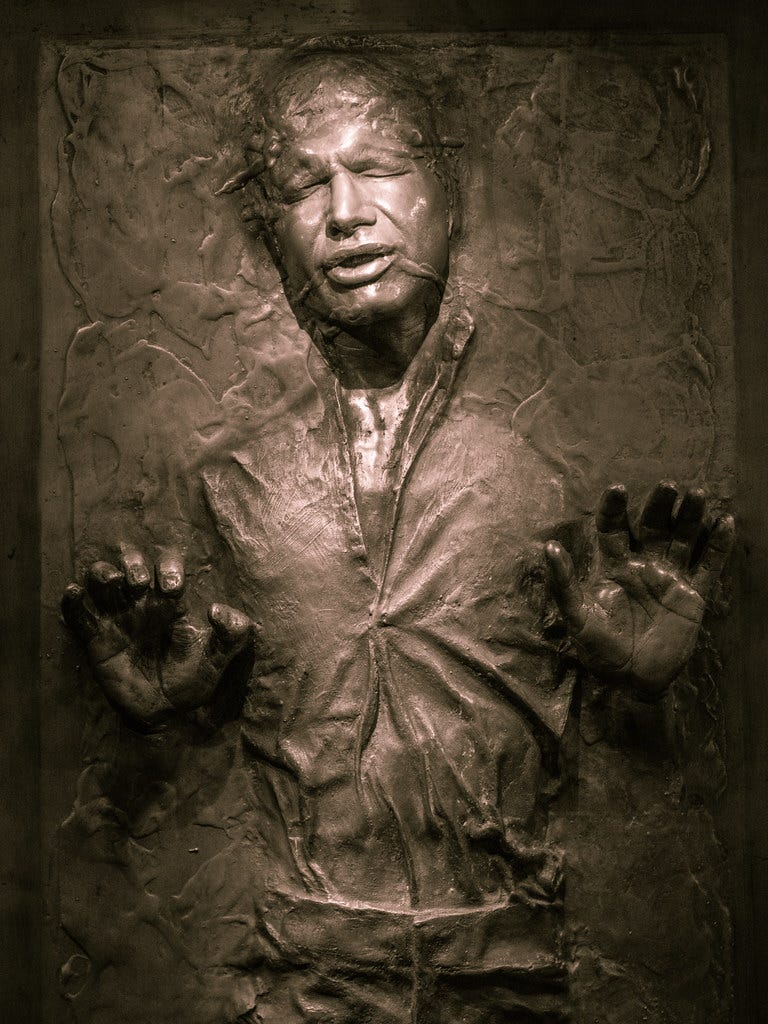

The OS walks over to the process to see what the matter is. It finds the process in a state of suspended animation, like Han Solo frozen in carbonite.

The OS isn’t particularly concerned about this one process—it has a lot of processes to wrangle, everything from systemd to firefox. So it chucks Mr. Solo into its cart of frozen processes, and hands the CPU a new process—one that’s in a more felicitous state to continue execution. The CPU starts running that process’s code, and away it goes.4

Meanwhile, the OS wheels Solo over to its workbench, and takes a closer look at him. It notices the PAUSE instruction and finds the associated request to read 10 bytes from file 5. Okay, easy enough. Look up the bytes, copy them into address 1234 in Solo’s memory.

Solo might sit in the cart for a while longer before he gets time on the CPU again, but eventually, his turn will come around. When it does, the CPU resumes executing his code right where it left off—after the PAUSE instruction. Solo wakes up, finds the data he requested right where he wanted it, and continues on his deterministic way.

The extra ritual around system calls provides several benefits:

First, the OS can deny the process’s request if it’s trying to do something it shouldn’t be allowed to do—like reading a file it doesn’t have permission to read.

Second, system calls can be monitored, making it easier to see the nondeterministic inputs to a process that might affect its behavior. This makes debugging nondeterministic code significantly easier. The tool for doing this on Linux is strace. A similar program called dtrace is available for macOS.

Suspendability

The system call mechanism relies on an important property of processes: they can be suspended and resumed—potentially with long intervals of time in between. In addition to enabling system calls to work, the suspendability of processes means your computer can go to sleep when you’re not using it, by pausing all processes and shutting off power to the CPU. Suspendability also enables the OS to swap out the running process for a new one at any point—an ability known as preemptive multitasking—which prevents any one process from hogging the CPU.

Games of Candyland can be suspended and resumed as well. The players can take a lunch break in between turns, and the outcome of the game is unaffected. They can even record the state of the game in some other form (e.g. by writing down the order of cards in the deck and whose turn is next), pack up the cardboard, and resume play days later.

Candyland doesn’t have anything analogous to system calls—at least, not if you play by the rules. Still, one can imagine situations where an outside agent might manipulate the game state in response to external conditions. For instance, if you’re playing Candyland against Chewbacca and he’s behind, it might be wise to stack the deck to let the wookiee win.

Abstractness

The third and final property of processes we’re going to discuss today is their abstractness. By “abstract,” I mean “independent of any particular representation”.

Consider the following thought experiment: you and I start a game of Candyland. Halfway through, we break for lunch. Just in case anything disturbs the board while we’re out, we make a record of the game state before we leave. Lucky thing, too, because while we’re at lunch, your housemate Greg spills coffee on the board (dammit, Greg!) ruining it.

Fortunately for us, we live in the Age of Computers, so we fire up Tabletop Simulator and set up the game there. Then we continue right where we left off. Pretty soon, though, the determinism of the game becomes glaringly obvious, and we start looking for ways to make it less tedious. We decide that pair-programming on a Candyland winner function would be more fun than actually playing the game. So we do that. Once our code is working, we plug in our recorded game state to find out which of us was fated to win all along.

Now, my claim is that we played a single game of Candyland, using four different media to do so:

humans + rulebook + cardboard

recorded game state

humans + rulebook + software

software

Which is to say: the game, the living, evolving process that computes the winner, survived the translation from each medium to the next. The game doesn’t care whether it’s running on people or computers. It will compute the same winner no matter how it is represented physically.

This description frames the game itself as some kind of nonphysical entity. You could even compare it to a ghost or a spirit, and indeed I’m hardly the first to make that observation (Sussman, Abelson, and Sussman did too, on the second page of Structure and Interpretation of Computer Programs). My goal here, however, is not to ponder the metaphysical ramifications of this observation, but to explore its practical consequences for software engineering.

The first consequence of processes’ nonphysicality or abstractness is that operating systems are free to arrange their processes’ states in any way that’s convenient. For example, when a Linux system hibernates, all process state is moved out of main memory and saved to disk. This allows the computer to completely power off, and resume all the processes the next time it starts up.

Even while the computer is awake, the abstractness of processes comes into play. Abstractness frees the computer to store process state in different ways. State can live in main memory, in the CPU registers, or in the caches in between. It can even be swapped out to disk if the computer is low on physical RAM. All of this shuffling around is invisible to the application programmer. Unless we’re writing very high-performance code, we don’t even have to know it exists. The abstractness of processes also means that programming language runtimes are free to change the underlying mechanism that makes the process go—as when a web browser invisibly switches from interpreting JavaScript to JIT-compiling it.

Wrap-up

Well, that was kind of a ramble. My apologies for the long post. What did we learn, in all of that?

We started with the idea that a game of Candyland is a computation, very similar to the processes that inhabit our computers.

We made this idea concrete by outlining a pure

winnerfunction for Candyland.We explored the implications of three properties that both processes and Candyland possess:

Processes are deterministic by default. Determinism makes processes easier to reason about, test, and debug, because it means that two processes with the same initial state will always compute the same result.

Processes can be suspended and resumed. This allows the operating system to task-switch between processes and introduce nondeterminism in a controlled way.

Processes are not dependent on any particular medium. This allows us to reason about them in the abstract, without having to know how they’re implemented in silicon.

All of this is leading up to something, I promise. (Well, except for the Candyland analogy—I probably won’t bring that up again.) In the next few posts, I plan to write about effects—a useful view of system calls and similar mechanisms—and then about the implications of effects for code design and testing. If you’d like to be notified of my future posts, you can sign up with your email below.

Yeah, yeah, I know, this game state type doesn’t account for the rule where players can get stuck on a space and lose their turn. I wanted to keep it simple—it’s just an example, after all.

Unix processes refer to open files by temporary ID numbers called file descriptors.

One misleading aspect of this little vignette is that it portrays the OS as independent of the CPU. In reality, the OS is just more code, and it’s running on the same CPU as the Han Solo process.