Effects

Interacting with the outside world

Previously, we discussed system calls, and how they allow processes to access information that isn’t computable from the process’s initial state. For example, processes can use system calls to get the contents of a file, the current time, or a random number. Today, I want to introduce effects, an abstracted view of system calls and similar mechanisms. I’ll define an effect as an interaction between a process and the outside world. I’ll then analyze the tradeoff inherent in using effects: namely, that effects are necessary to all practical software, but also dangerous, hard to reason about, and hard to test. Finally, I’ll sketch a few approaches to mitigating those downsides.

What is an effect?

As we saw in the post on Candyland, processes are abstract. They exist in a realm of pure thought, simply deriving new information from old. Though they need physics to run, they aren’t dependent on any specific physical medium. A game of Candyland will compute the same winner whether it’s running on toddlers or silicon.

When played via its traditional medium (cardboard + humans), Candyland enmeshes the players within its computation. It doesn’t need a separate user interface to show who’s ahead and whether anyone’s won yet, because the computing machinery interacts with the players already, by its very nature. A computerized Candyland game, on the other hand, cannot be a purely abstract computation. Manually probing the voltages in your computer to figure out what state it’s in is, shall we say, no longer popular.

These days, software needs a user interface—which is to say, the software has to come down out of the clouds of Platonic idealism at some point and make specific physical hardware actually do stuff. E.g. it has to write text to a terminal, draw pixels on a screen, play sound through a speaker, check the position of the mouse, and listen for keypresses. In short, it has to have effects.

An effect is any interaction between software and hardware. Effects are the bridge between abstract cogitation and the physical world.

System calls are one way to implement effects, though there are other mechanisms (e.g. signals). Here are some examples of effects off the top of my head:

Creating a file

Reading data from a file

Getting the current time

Listening for a keypress

Getting the current cursor position

Requesting an HTML page from a web server

Generating a random number

Running a database query

Sending an email

Waiting 5 seconds

Launching a new process

Controlling a robot

Drawing graphics on the screen

Playing sound

Adjusting the volume

Printing a document

Shutting down the computer

As you can see from this list, basically all of the most interesting things software can do fall into the category of effects.

Digression on Turing-Completeness

A software system is termed Turing-complete if it can compute anything that can be computed. This definition implies that all Turing-complete systems are equivalent: each of them can simulate any of the others.

Computer scientists love this concept, but when I was first introduced to it, I found it a bit perplexing. Someone told me that SQL was Turing-complete. “So you could build Tetris in SQL?” I asked. They gave me a puzzled look. “That’s not really what Turing-complete means.”

The problem is, Tetris relies on effects. It needs to react to the passage of time, sense keypresses, play sound effects, and draw colored boxes on the screen. SQL, on its own, doesn’t provide mechanisms for any of those things. The idea of Turing-completeness, like Alan Turing’s eponymous machine, concerns itself only with abstract computation. Effects are nowhere in the picture.

This is not to say that abstract computation or Turing-completeness are bad ideas—quite the contrary. The omission of effects provides great focus and clarity. But you have to understand the limitations that come with any view of the world. And the limitation of the computational view is that computations, on their own, don’t do anything. That a system is Turing-complete tells you very little about whether you can build practical software entirely within that system.

Problems with Effects

In the previous post, I compared computational processes to ghosts or spirits. If processes are spirits, effects are obviously sorcery. All the usual warning labels apply.

The main danger with effects is that, because they affect the real world, you generally can’t take them back. Software effects have killed patients, blown up rockets, and thrown away hundreds of millions of dollars. That kind of stuff is hard to undo. It is therefore imperative to keep effects on a very short leash.

Unfortunately, effectful code tends to be difficult to reason about and predict. There are two reasons for this.

The first reason is that effects introduce nondeterminism, so running the same code multiple times with the same input can produce different outputs. Such code is prone to “works on my machine” bugs—even if it does the right thing in one context, it might break when moved to a different context.

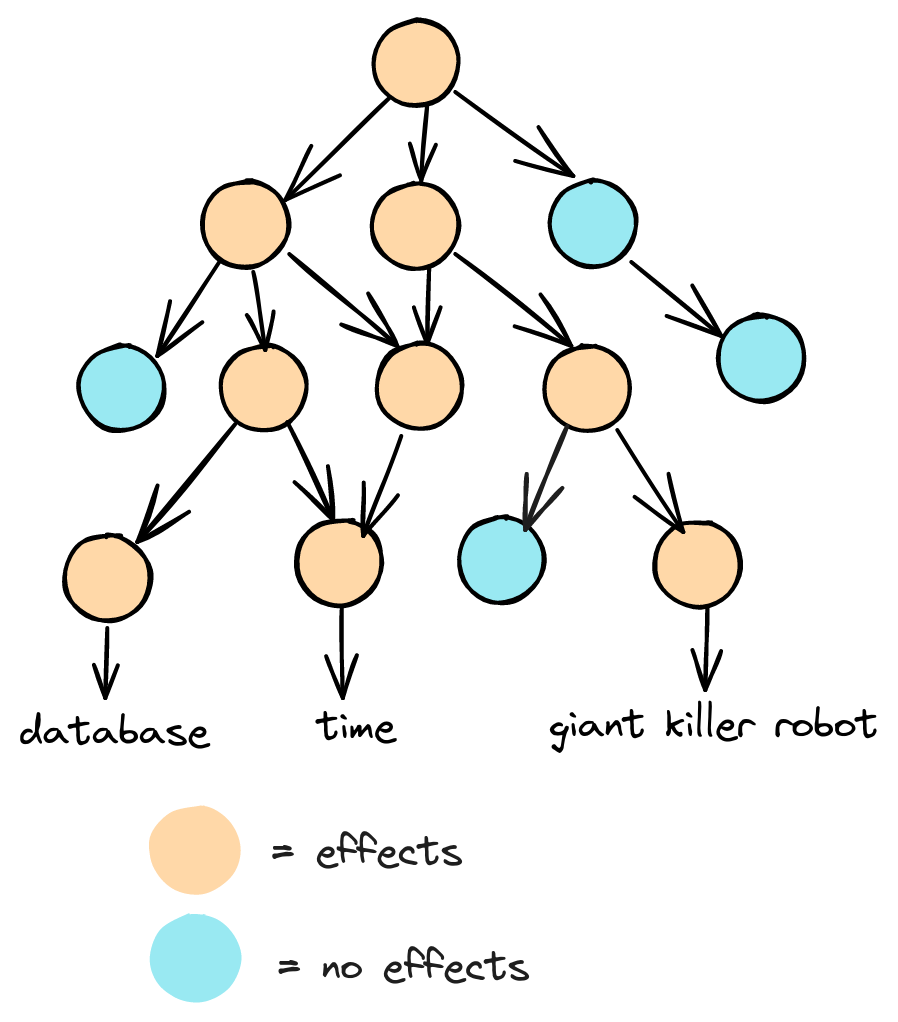
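To make the nondeterminism concrete, here is a minimal sketch contrasting a function with no effects against one that reads the system clock. The names `daysBetween` and `daysUntil` are made up for this illustration and don't come from any library.

```javascript
// Pure: the result depends only on the arguments.
function daysBetween(start, end) {
  const msPerDay = 24 * 60 * 60 * 1000;
  return Math.floor((end.getTime() - start.getTime()) / msPerDay);
}

// Effectful: reads the system clock, so the result depends on when
// (and on which machine) the call happens.
function daysUntil(deadline) {
  return daysBetween(new Date(), deadline);
}

const start = new Date("2024-01-01T00:00:00Z");
const deadline = new Date("2024-01-31T00:00:00Z");

console.log(daysBetween(start, deadline)); // 30, every time
console.log(daysUntil(deadline)); // varies with the current date
```

The two functions do the same arithmetic. The only difference is that the second one quietly takes an extra input from the outside world.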

The second reason is that effects can be hidden in the code—buried in a deep stack of function calls. If A calls B and B has an effect, then calling A might have that effect, too. Extend this to a long chain—A calls B calls C calls D—and you have a recipe for unpleasant surprises. “I had no idea calling that function would delete the production database!”
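Here is a small sketch of that A-calls-B-calls-C-calls-D chain. Everything in it is hypothetical: `productionDb` is an in-memory stand-in for a real database, and the function names are invented to look harmless.

```javascript
// A stand-in for a production database (hypothetical, in-memory).
const productionDb = { users: ["ada", "grace", "alan"] };

// D: the only function that visibly performs the effect.
function dropAllUsers() {
  productionDb.users.length = 0;
}

// C calls D.
function resetStorage() {
  dropAllUsers();
}

// B calls C.
function clearCaches() {
  resetStorage();
}

// A calls B. Nothing in this name hints at data loss.
function refreshDashboard() {
  clearCaches();
  return "dashboard refreshed";
}

refreshDashboard();
console.log(productionDb.users); // []
```

Reading `refreshDashboard` on its own gives no warning. You only find the effect by following the calls all the way down.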

Effects are thus a contaminant that spreads, virus-like, up the call graph. Left uncontained, this contamination will spread until almost the entire system is made of effectful code—i.e. code that’s dangerous and hard to predict. When the effects in our software escape our intellectual grasp, changing the code becomes scary, and development of the system slows to a crawl.

We might hope that by carefully testing our software, we could gain some control over effects, but that hope is in vain. Effectful code is the most difficult code to test, precisely because effects reach out into the physical world, often in ways that tests can’t observe or control.[1]

This collision of forces—the danger and invisibility of effects on one hand, and the difficulty of pinning them down with tests on the other—is the motivation for much of software design. By designing our software carefully, splitting and joining parts along the right lines, we can tame the effect/testing problem, though we can’t completely eliminate it.

Testing Around Effects: An Example

Here’s an example of some code that has an effect. Our teammate Eliza wants to receive a report of how many users signed up last week, broken down by country. The code outlined below generates the report and emails it to her:

```javascript
// This code isn't the best design. We'll see better ways of writing
// it in future posts.
async function sendSignupReport() {
  const newUsers = await database.query("users")
    .where("signup_date", ">", weeksAgo(1));
  const reportData = breakdownByCountry(newUsers);
  const reportHtml = formatReportAsHtml(reportData);
  await sendEmail({
    from: "noreply@example.com",
    to: "eliza@example.com",
    subject: "Weekly signup report",
    body: reportHtml,
  });
}
```

This code depends on at least three effects:

`weeksAgo` has to look at the current time, to determine what time it was a week ago.

`database.query` reads from the production database.

`sendEmail` sends an email.

We’d really like to test this code, because if we send an email with the wrong information, we can’t unsend it. However, testing it is difficult, because:

`database.query` reads from the production database, which is constantly changing as new users sign up.

`sendEmail` actually sends an email. Our tests would like to assert that the email contains the correct data, but to do that, we’d need to look in Eliza’s inbox, and she probably doesn’t want to give out her email password. (She also probably doesn’t want to get an email every time our tests run.)

`weeksAgo` depends on the current time, which is of course constantly changing as our test runs. So we can’t predict or control exactly what time will be used in the database query. That means we can’t predict the query results, which means we can’t predict what information will appear in the email. Uncertainty cascades.
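The cascade from the clock to the query results can be replayed in miniature. The sketch below assumes one plausible implementation of `weeksAgo`; the real one isn't shown in this post. The helper `weeksBefore`, the function `includedAt`, and the sample signup date are invented for the illustration.

```javascript
const MS_PER_WEEK = 7 * 24 * 60 * 60 * 1000;

// One plausible implementation of weeksAgo (an assumption): it reads the clock.
function weeksAgo(n) {
  return new Date(Date.now() - n * MS_PER_WEEK);
}

// The same arithmetic with the clock reading made explicit, so that we can
// replay two different test runs side by side.
function weeksBefore(now, n) {
  return new Date(now.getTime() - n * MS_PER_WEEK);
}

// A user who signed up at a fixed instant.
const signup = new Date("2024-03-04T12:00:00Z");

// Would the query's filter (signup_date > cutoff) include this user
// if the test happened to run at runTime?
function includedAt(runTime) {
  return signup > weeksBefore(runTime, 1);
}

console.log(includedAt(new Date("2024-03-11T11:59:00Z"))); // true
console.log(includedAt(new Date("2024-03-11T12:01:00Z"))); // false
```

Two runs of the same test, two minutes apart, see different query results. Nothing in the code or the data changed; only the clock did.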

How might we resolve these testing difficulties? There are several possible approaches:

We can surgically replace the effectful functions with mocks or fakes.

We can redesign the code to solve a more general problem. (Surprisingly, more general code is often easier to test.)

We can give in to nondeterminism, and loosen our assertions to give the code some wiggle room.

We can reimplement parts of the production code in our tests to compute the expected result.

We can separate the effectful and non-effectful parts of the code, and test each part in a different way.
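As a rough preview of that last approach, here is one way the non-effectful part of the report code might be pulled out and tested by itself. This is a sketch under assumptions, not the design later posts will settle on: the bodies of `breakdownByCountry` and `formatReportAsHtml`, the `country` field on each user, and the function `buildSignupReportEmail` are all hypothetical.

```javascript
// Pure: counts users per country. The `country` field is an assumption.
function breakdownByCountry(users) {
  const counts = new Map();
  for (const user of users) {
    counts.set(user.country, (counts.get(user.country) ?? 0) + 1);
  }
  return counts;
}

// Pure: renders the counts as a small HTML list, sorted by country.
function formatReportAsHtml(reportData) {
  const items = [...reportData.entries()]
    .sort(([a], [b]) => a.localeCompare(b))
    .map(([country, count]) => `<li>${country}: ${count}</li>`);
  return `<ul>${items.join("")}</ul>`;
}

// Pure: decides everything about the email except actually sending it.
function buildSignupReportEmail(newUsers) {
  return {
    from: "noreply@example.com",
    to: "eliza@example.com",
    subject: "Weekly signup report",
    body: formatReportAsHtml(breakdownByCountry(newUsers)),
  };
}

// Exercising the pure part needs no database, clock, or mail server.
const email = buildSignupReportEmail([
  { country: "FR" },
  { country: "JP" },
  { country: "FR" },
]);
console.log(email.body); // <ul><li>FR: 2</li><li>JP: 1</li></ul>
```

What remains effectful is a thin shell that runs the query, calls `buildSignupReportEmail`, and hands the result to `sendEmail`. That shell still needs a different kind of testing, but it has far less logic to get wrong.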

In the next few posts, I’ll talk about each of these techniques in turn. Stay tuned.

Conclusion

To summarize:

An effect is any interaction between a software process and specific physical hardware, like a hard drive, display, or keyboard. Effects introduce nondeterminism and allow the software to act in the real world.

Effectful code is dangerous, because:

it might cause irreversible damage.

in any case, if your code does the wrong thing via an effect, someone’s gonna know.

Effectful code is hard to reason about, because:

it’s nondeterministic.

effects can hide under many layers of function calls.

Effectful code is hard to test, because:

it’s nondeterministic, so setting up the test is hard.

tests can’t easily observe real-world effects like sending an email or playing an audio file, so asserting on the result is hard.

Effectful code contaminates its callers, making them effectful too. In the absence of countervailing factors (e.g. design) most of the code in a typical system will be effectful—and therefore dangerous, hard to reason about, and hard to test. With proper software design, though, we can control and contain effects, ensuring the continued health of the system.

[1] Introductory manuals on test-driven development tend to gloss over this point, leading to much gnashing of teeth later as students struggle to apply TDD to their messy, effectful reality.